- February 20, 2017

- Joe

- knowledge

The cloud is meant to sound ethereal and floating, something we don’t need to worry about. It has worked! However, cloud computing is very real: it has a physical presence and a cost. So, where does the cloud actually live?

Floating around

Server

In reality the cloud is a bunch of servers located in a data center (more on that later). A server is a computer built, well, to serve. Its main characteristics are that it’s built to be on 24/7 and that it needs to be very fast. So, while it may have the same architecture as any PC, its components are chosen for performance and durability. Things have changed, though: today’s servers don’t need to be as well built as before, but they still need to run 24/7.

Rack

All these servers are stacked one on top of another inside a rack, which is then connected to power and to the network. As you might imagine, so many computers packed so tightly generate a considerable amount of heat, so cooling the room where the rack sits becomes essential. In short, good A/C is needed.

Data Center

So we have servers in a rack, which in turn needs serious A/C and a network connection. The place that houses all this is called a data center: a location where a lot of servers are put together. They may or may not work in tandem.

As you might imagine, putting all this together costs money in real estate, electricity and networking. Google has come a long way since its first rack, and started to build its own data centers. It quickly concluded that more efficient data centers gave it a competitive edge. For this reason Google focused, almost a decade ago, on making those places as efficient as possible, especially in energy use. To start, they got rid of the A/C. Their data centers still have a ventilation system to keep the servers cool, but the rooms are not kept as cold as before. Many other companies followed suit, to the extent that when Facebook started to build its own data centers, they were designed using what little knowledge Google had let leak out, and on the same premise: the more energy efficient a data center is, the better.

Size does matter

Data centers can be as small as a cubicle within an office or as big as a factory. Because of the capacity they need, the data centers of companies like Amazon, Apple, Google, Facebook and Microsoft tend to be huge: their installations are factory-sized.

Location, location, location!

We now have a basic idea of the main variables (the public ones, at least) in locating or building a data center: cost of land, access to a high-speed network and access to electricity. All three must be there in order to build such an installation. We can also divide data centers into general-purpose ones and tailored ones. General-purpose data centers rent racks or space to different companies. They are smaller and are generally located within a city. That is very costly, but the convenience of having the data center within walking or driving distance outweighs the extra cost. Tailored ones (Google’s, for example), by contrast, are big installations. Even old factory sites may not be large enough to host them, so they have to go further out; this is why these data centers end up in isolated places.

With such a big installation, the location becomes even more important. Maybe land is cheap, but there’s no electricity or network there. And vice versa.

High-speed network access is challenging but not impossible. Within reason, it’s possible to bring a high-speed network to most places. Electricity? That’s the challenge!

You see, a big (factory-sized) data center can easily consume 10 megawatts of power. Legend says that the biggest of them all, in China, consumes 150 megawatts. 10 megawatts can power around 10 thousand homes (you do the math for 150). So it’s a lot for just one data center.
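To “do the math” for 150, here is a minimal Python sketch. It assumes the ratio implied above (10 megawatts for about 10 thousand homes, i.e. roughly 1 kW of average draw per home). The function name `homes_powered` and that default are illustrative assumptions, not measured figures.

```python
def homes_powered(data_center_mw: float, avg_home_kw: float = 1.0) -> int:
    """Estimate how many homes a given load could supply.

    avg_home_kw is an assumption derived from the article's own ratio
    (10 MW ~ 10,000 homes); real averages vary by region and season.
    """
    return round(data_center_mw * 1000 / avg_home_kw)


if __name__ == "__main__":
    for mw in (10, 150):
        print(f"{mw} MW ~ {homes_powered(mw):,} homes")
```

Under that assumption, the rumored 150-megawatt facility would draw about as much as 150 thousand homes.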

But that’s not all! Even if the power is available, it is preferable for PR, environmental and economic reasons that the electricity powering the data center be generated in the most environmentally friendly way. This complicates the choice of location even more! So it’s no coincidence that some Google and Microsoft data centers on the west coast of the US are located in Oregon, close to a hydroelectric dam.

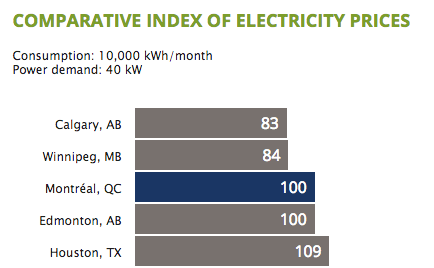

The province of Quebec, Canada, has offered cheap hydroelectric power for decades, and I had wondered why it wasn’t a hub for data centers. Well, it appears this has started to happen, slowly but surely. Ericsson, OVH, Amazon and Microsoft have built data centers here in Quebec in the last two years. The province offers cheap and cold (as in polar winds) land, access to high-speed networks AND low-cost hydroelectricity. Low cost, but not the cheapest. See below.

Notice that Calgary, Edmonton and Winnipeg have lower or equal prices; however, that electricity is generated with coal, a deal breaker for some. But what about other North American cities?

The only city that comes close to Montreal in cost is Chicago, and it doesn’t have the cheap real estate Quebec has. Yes, that’s $1.5 million CAD per month, at least! Do you now start to appreciate how the cost of electricity AND the way it is generated become decisive factors in locating a data center?
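To get a feel for how the rate per kilowatt-hour drives the monthly bill, here is a rough Python sketch. Everything in it is an assumption for illustration: a constant 10 MW load (the figure used earlier), about 730 hours in a month, and placeholder rates that are not taken from the city comparison.

```python
HOURS_PER_MONTH = 730  # assumption: 8,760 hours per year / 12


def monthly_energy_cost(load_mw: float, rate_per_kwh: float) -> float:
    """Cost of running a constant load for one month, in the rate's currency."""
    kwh = load_mw * 1000 * HOURS_PER_MONTH
    return kwh * rate_per_kwh


if __name__ == "__main__":
    # Hypothetical rates (CAD per kWh), not real utility prices.
    for rate in (0.05, 0.10, 0.20):
        cost = monthly_energy_cost(10, rate)
        print(f"{rate:.2f} CAD/kWh -> {cost:,.0f} CAD per month")
```

Under these assumptions, each extra cent per kilowatt-hour adds about 73 thousand CAD to the monthly bill.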

Can a Data Center be green?

With all the logistical challenges involved in building a data center, one would be inclined to think that the fact that they get built at all is an achievement in itself (it is!), so making them green might be an afterthought. Besides, any efficiency gain is also good for the environment. That’s the route many big players have taken: build the data centers and then compensate for their energy consumption by buying renewable power elsewhere, resulting in a carbon-neutral data center.

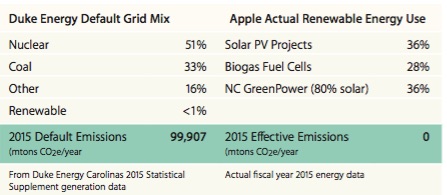

However, Apple took this matter to heart with one of its data centers, in Maiden, North Carolina (see Fig. 1). According to Apple, this data center runs 100% on renewable energy: solar plus biofuel. The installation meets the paramount requirements: high-speed network access, low-cost land and low-cost electricity. On the other hand, the cleanest energy source the local grid offers this installation is nuclear. As always, the devil is in the details:

You can see the location factor right away: the data center is plugged into the Duke Energy grid. It is able to run by itself without being connected to the grid, and when the sun is shining it can give extra energy to the grid. But we all know the sun doesn’t always shine during the day, and never at night. The facility doesn’t store electricity (i.e. no batteries for after-dark work). Apple overprovisioned the site with solar panels, so even when it’s cloudy there’s enough electricity for it to function. While Apple doesn’t go into detail on the matter, we can make an educated guess: when the sun shines, the site produces excess energy that is saved for use at night in the form of credit with the grid. For example: today I (the data center) generated an extra 10 MWh of energy during the day, so at night you (the grid) will give it back to me. Add the biogas fuel cells, and this is how Apple can claim the installation runs 100% on renewables. Hey! They didn’t say it was running on renewables 24/7!
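The educated guess above amounts to simple bookkeeping. The Python sketch below is a hypothetical illustration of it, not Apple’s or Duke Energy’s actual accounting: the function name and the hourly numbers are made up, and it assumes the grid credits every exported megawatt-hour one for one.

```python
def net_grid_balance(generation_mwh, consumption_mwh):
    """Return (exported, imported, net) energy in MWh over a period.

    Each list holds one value per hour. Surplus hours export to the
    grid; deficit hours import from it. One-for-one crediting is an
    assumption made for illustration.
    """
    exported = imported = 0.0
    for gen, use in zip(generation_mwh, consumption_mwh):
        if gen >= use:
            exported += gen - use
        else:
            imported += use - gen
    return exported, imported, exported - imported


if __name__ == "__main__":
    # Made-up day: 12 sunny hours generating 20 MWh each, 12 dark hours
    # generating nothing, against a constant 10 MWh consumed every hour.
    generation = [20.0] * 12 + [0.0] * 12
    consumption = [10.0] * 24
    exported, imported, net = net_grid_balance(generation, consumption)
    print(f"exported {exported} MWh, imported {imported} MWh, net {net} MWh")
```

A net of zero or more over the period is what would let a site call itself 100% renewable on paper while still drawing from the grid after dark.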

We have ignored tax issues and local tax charges, which can also influence the location decision, though definitely not as much as the factors discussed above. So now you know: when someone talks about cloud computing, we can figuratively think of it as floating in the sky, but it lives firmly on the ground, in a data center. And it costs a lot of money!